A presentation to the Columbia, MO GDG developer meetup at Quarkworks on how to get started with Google Cloud.

Video • Slides

Introduction

I’ve been doing a lot of Google Cloud in the last few months. A few years ago, I was doing AWS. I’ve done a lot of unix over the years on various different platforms, and in the process of getting up to speed on Google Cloud, I’ve learned a lot. By extension, I thought I would give you a brief introduction of the platform. It’s a complicated subject, but I’ll try to help you get to speed as quickly as possible.

We’ll do an overview of Google Cloud and we’ll look at Google’s Colab, which is like a notebook style tool for doing cloud computations. We’ll look at their deep learning AMI, which is a very quick and easy way to build up a deep learning, machine learning project. We’ll do a demo with the TPU, which is Google’s Tensor processing unit, which is one of the fastest machine learning platforms in the world. We’ll do an easy demo and then an advanced one, and then finally, we’ll go through some of the various random stuff that I’ve hit while trying to learn the platform, and hopefully save you the time of messing with that.

About Google Cloud Platform

So what is Google Cloud platform? Basically, this is Google’s tools for building Google. Many people think that Google has its own set of secret libraries and secret sauce, but the truth is that whenever you log into the Google Cloud console, that’s basically 95% of what Google engineers are actually using every day. If your long term dream is to work for Google, just learning Google Cloud platform is the fastest way to get there. There’s no secret sauce to tell the truth. As a result of running Google, these tools are battle hardened and they work on Google’s scale. You can put billions of users on these things, everybody and their brother are trying to hack everything, and so much of the security stuff has already been thought through 13 different ways. As a result, if you can use their platform, you can get the benefit of all this work that other people have done. The flip side of this is that at Google, most of the projects are set up a certain way. So it’s an opinionated system for building tools. This means that if you’re used to doing things a certain way, and the Google system is designed differently, then you need to either rethink how you’re doing things or try to jury-rig something — but at which point, you’re going off into the weeds. So this is something that people get frustrated about because Google doesn’t necessarily let you do things the way you like to do things, but the flip side is that if you follow their process, you’ll be within their sandbox, and then you’ll get the benefits of all this other stuff. So, one of the big things that the Google Cloud platform gives you is user authentication — you can do one log in to the Google system. You have one system over here, and one system over there, but they have a single shared log in system. Then broadly, the Google Cloud platform is really just Unix. So if you know Unix, then you know this already. But if you don’t like Unix…well, that’s all it is.

Two Tricks to Know for Colab

This is the first super easy trick that I think everyone can pick up. This is Google’s Colab, which is very much basically Google’s version of Jupyter Notebook. Google runs this, and they have free GPUs and TPU time, so you can just run your stuff for free in the cloud for 24 hours. If you can do basic Python, you can do this stuff. This is all completely free to use, and we’ll do a 5 second demo of it. When we run it, it runs up in the cloud and outputs the result. There you go, a print statement. A couple years ago, I did this demo from one of my talks. This is a machine learning demo. We’ve copied and pasted that code from my old project into the new one, and we will now run it. If we scroll down, it’s training a very simple neural network to do machine learning on the MNIST data set. We’ve just built a neural network model running in the Google Cloud. Basically, you can find all this code on the internet and just run it for free. A lot of these libraries and stuff can be a pain in the butt, but this just abstracts it all away.

The second trick that I think you should know for doing this Google Cloud stuff is basically, many people have already built pre-configured machines — so if you’re familiar with Docker, Kubernetes, these sort of tools, there are pre-built machines and all you have to do is simply run one. To give a good example — we will create an instance, this is the basic drag and drop interface. We can click that button there, and now we can make a server that has 3.7 Terabytes of RAM, just like that. From the same concept, Google has already set up this whole marketplace of solutions. We search for deep learning, this is the Google default image right here, click here. You can configure it to have a different GPU. This is the Tesla T4 GPU that came out last fall. Then right here, if you’ve never had to set up one of these libraries, it’s a one click button. No work, and you have a very nice starting point for building a workflow.

Q: What’s the difference in functionality between Google Cloud and AWS? Do you have a preference for which would be best for a server side back end?

A: AWS is more oriented towards a sort of commoditized version of all this stuff. I would honestly prefer Google Cloud right now, having done this for the last year. I think Google Cloud is cheaper for a lot of things. Google Cloud does not have as many features as AWS, but on the flipside AWS will often give you five ways to do things and try to make them all work together, and you just need one. In theory, the price is about the same, but I would say in practice Google Cloud often ends up being cheaper.

So we hit this button, and it’s deploying a new machine in the cloud. In a couple minutes, we’ll have a virtual machine for running TensorFlow. This is using the new TensorFlow 2, which is completely bleeding edge, we’ll say. Someone at Google has packaged that up neatly and nicely for us. We’ll come back to this one here in a second.

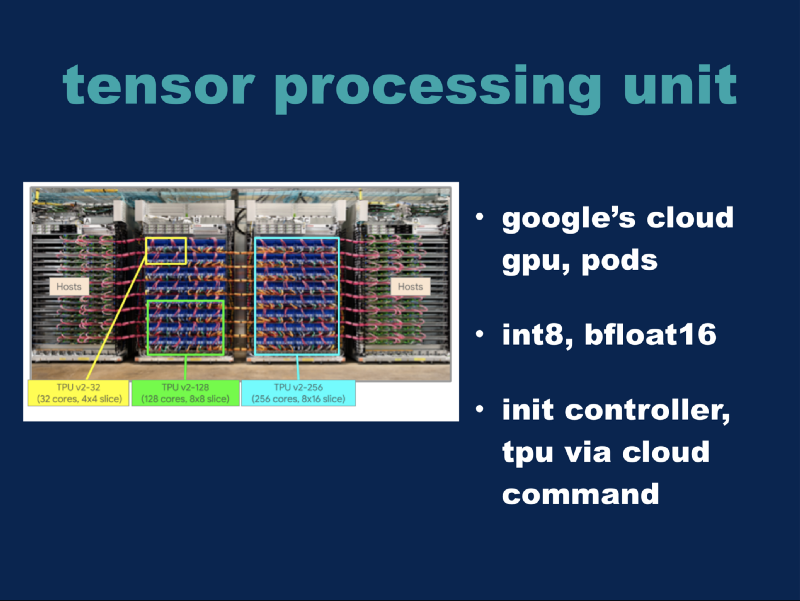

A Look into Tensor Processing Unit

Now we’ll look at the tensor processing unit, or TPUs. This is Google’s cloud GPU that they made themselves, it’s custom hardware but you can access it via the Google cloud console. They support some different data types. I did a talk last fall (Tensorflow and Swift) where we went deeper into these data types. In this picture, there is a full rack of these things — this is a group of 4 TPU-2s, and in this whole cluster is a cluster of 64 TPU-2s, and this whole thing is roughly somewhere around 100 petaflops of processing power. The important thing to note about this picture is that these right here are the TPUs themselves, they’re like a fancy GPU. These things over here are the computers that control them. The way you actually use a TPU in the real world is you create a controller computer, and then you have a TPU that’s bound to that controller computer so that the controller computer issues commands to the TPU, and they talk to each other through the Ethernet cable. If you’ve done traditional machine learning with regular devices, the crucial difference is you often have to deal with this whole networking layer, which adds a layer of complexity. We’ll see here in a second how it actually works, but that’s the key concept if you want to mess with TPUs.

Using MNIST

We did the MNIST before, just using Keras as a basic demo. Now we’ll do MNIST again, but we’ll run it on a TPU in the cloud. The first command you’ll run is ctpu up. This will create the virtual machine that’s going to control your TPU, as well as register a TPU for your use. You’ll run this command — the problem for the demo is that this takes 5 minutes for all this stuff to get going. I did it 20 minutes ago so you wouldn’t have to sit through that part. Now we’re logged into a computer which is then connected to a TPU.

Now we’re setting a place for the data to be stored in after it gets run. Now we will run it… It takes a few seconds to get going, but the local computers are talking here. It’s connecting to the remote computer, and then if we wait another 30 seconds or so, it will all start to work together. Now, we’re actually training the MNIST data set using the TPU in the cloud. Now we’ve done everything we did with Keras before, but we did it with a cloud supercomputer. It runs a test afterward… Now it’s finished and if we go back over here and look at our results — we saved it to the Google Cloud. It’s just a basic demo of doing machine learning in the cloud.

We’ll say that the Keras demo was beginner level, and the one we just did is intermediate level. It’s all command line, but conceptually it’s the same thing.

Resnet Demo

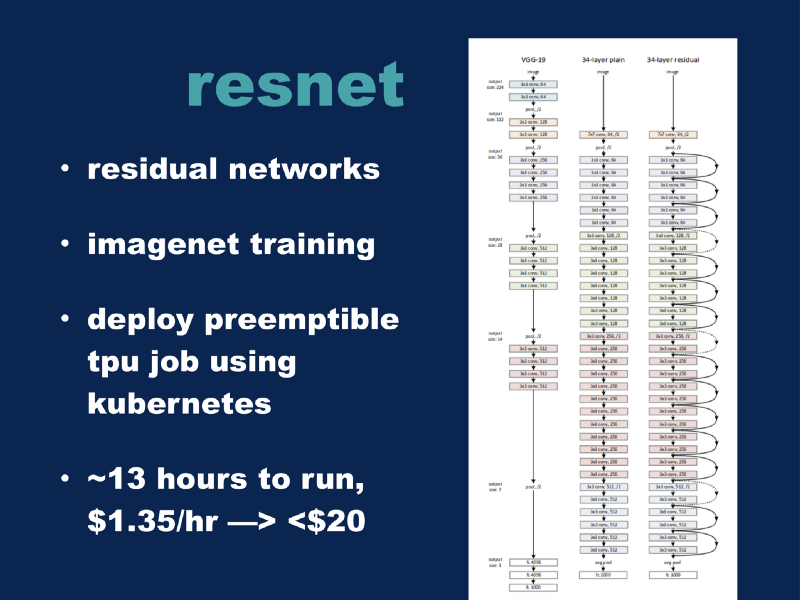

Now we’ll do an advanced demo. Resnet, or residual networks, are a computer vision network. We’re going to train it now on the ImageNet data set, which is a larger data set. MNIST is about 100 mb of data and ImageNet is about 150 GB of data. We went from 10 categories for MNIST to 1000 categories for ImageNet, and finally the third trick we’re going to do is we’re going to add Kubernetes to this whole mix. Before we had a single controller computer, and now we’re going to have a ring, a cluster of three computers that are the controllers for the TPU nodes, we’re going to add a batch job to the Kubernetes cluster which will then spin up a TPU instance in order to run the whole ResNet ImageNet training demo.

The process of actually creating a Kubernetes cluster is nothing more than running this command:

gcloud container clusters create tpu-models-cluster \

–cluster-version=1.10 \

–scopes=cloud-platform \

–enable-ip-alias \

–enable-tpu

I did that already; it takes about 10 minutes to run. Then we’re going to run this Kubernetes job file. I took the default for the TPU demo and modified it slightly. Here’s where I have the data, and here’s where it’s going to save its results. Other than that, everything is pretty much the same. Then, finally we create this job. We submit it to the cluster, and now the cluster will attempt to create TPU pod in order to run it. Here’s the TPU that is spinning up to run this thing. This will take about 5 minutes, and then it will run the job. I’ve done this already; we’ll jump to the end results.

Reasons for Using This Process

Why would you want to use this process? The strength of this approach is that the cluster of Kubernetes nodes is self-healing. These are some other things that are running right now, and they’re doing jobs. But we can take one of these servers and delete it. So that’s being shut down right now. If we stand here and wait for a few minutes, we’ll see that the cluster detects that the computer died, and it will basically re-spin up a new machine and restart doing the job again. See, it’s already figured out that the machine died and it’s creating a new one. This gives you a lot of resiliency for your jobs and scheduling, because you don’t have to micromanage things. The cluster can take care of it for you. Our ImageNet will run for a while, and we’ll get a set of results like this. It will output the whole model. This particular configuration will take about 12–14 hours to run. Part of what we’re doing is using preemptible TPUs, which means that Google charges us about $1.50 per hour to run these machines. So doing this full ImageNet run will cost you under $20. So as recently as a couple years ago, doing something like this would cost about $1000, and nowadays for the price of a pizza, you can get it done yourself. This is the current state of the art for ImageNet training in the world. You can see that by utilizing the methods I just showed you, you have costs comparable to the 1st and 2nd best results in the world for training a public cluster on a public cloud using off the shelf tools, with nothing more fancy than what I just showed you.

The remainder of this is basically some random Unix stuff I’ve run into while doing all this stuff. Most of the Google Cloud infrastructure is based around Python 2, so if you’re used to using Python 3, you may have some fun getting your workflows working in the cloud with that whole system. Most of the Google Cloud infrastructure is built around Debian, but then a lot of the deep learning is built around Ubuntu. As somebody who has spent a lot of time with Unix in the past — one of the quickest ways to go mad is to try and trouble shoot something in between two ever so slightly different versions Unix. [laughter] My basic advice to you is to make your development machine identical to whatever is running in the cloud, and then there is no way that libraries can come and stab you in the back. Some of the sudo tools are kind of weird with Google Cloud, so that may not all work the way you’re used to doing it. Just FYI.

Fundamentally, all these Google Cloud tools are scriptable and API-able. To use the example before of creating an instance — you can copy and paste this and it will give you the commands. So copy paste and say you need a new server, paste that in your command line and fire it off. In the same concept, you can modify the other parameters, say if you only want a certain type of thing or whatever, you can just find that parameter. Literally everything in the Google Cloud console is scriptable like this. There’s that little REST button or console input someplace. Anything you can do, then it is trivial to take it and convert it into a script or cron job or something to that effect.

I’ve not demoed Tensorboard, but that’s a good tool for tracking machine learning jobs. Bigtable is Google’s version of SQL. Pub/sub is a pattern for doing event scheduling. Beam is kind of the new hotness. I don’t know if many people have seen it, but you take the first two concepts (Bigtable + pub/sub) and combine them together, so you have a sophisticated batch processing pipeline for dealing with data. If you’re dealing with lots of data processing, and batch processing, I think you should know that. Stackdriver is Google’s tool for dealing with logs. Tracking, and making sure your logging is correct. They have this app that lets you connect to all your servers. Let’s take our TensorFlow machine that we started before and we’ll delete it. So now we’ll wait a few seconds, and now our server is being deleted. That’s pretty cool to me.

Google has more advanced tools, computer vision tools. You can take a picture of your cloud and it will tell you what’s in it. They have NLP language tools, you can send text up the cloud and it will attempt to make sense of it. AutoML has this new thing where you can push data up to the cloud and it will try to find the best models, so you don’t even need to understand how all this machine learning stuff works. This is all the cutting edge future stuff.

Conclusion

If you’ve enjoyed what I’ve done here, you can go through the TPU demos. These are all different demos that you can do that are slightly modified versions of what I did with Resnet earlier, but these all should be runnable. If you’re interested in this, then I would highly recommend you play around with that. Google Cloud has a set up right now where if you sign up, you get $300 in credits for free. All the demos I’ve done today can be done for $10 to $15, so at the very least, you can put $100 of their money into using their platform and at least learn how some of this stuff works.

Q: Are there any good PDFs or books or e-readers or background material… or is this something where no books are written? Is there bibliography for this talk?

A: There are a couple good books out there, I would say… From like, O’Reilly… but to be honest, if you run through this whole set of tutorials, pretty much everything I did today is in here somewhere. This is the MNIST model I did, here’s exactly how to do it.

Q: So you think the online documentation is enough?

A: Yeah. I would say so. This is resnet, I literally just copy and pasted this demo and ran it in the console.

Thank you all for coming!

Visit Google Cloud for some more helpful tutorials.